Please like me

Sundays

When I woke up this morning I wrote a long to-do list. Instead of working through it, I somehow ended up spending most of my day playing in R.

Scraping the web

I have been keen to try web scraping in R for a while so I gave rvest a go. I was a bit too restless to actually read any tutorials so I just started trying different commands. To be honest I think that was a mistake as I spent quite a while without anything to show for it.

The title of this blog post is not a desperate cry for attention. The Australian show “Please Like Me” is probably my favourite show ever. It made me laugh and it made me cry, and one of the main characters is a super cute dog. I wanted to scrape data from IMDb and I wanted to start out simple, so I tried getting a list of the characters. I already had SelectorGadget (http://selectorgadget.com/) installed, which I used to identify the relevant parts of the page.

# Assumes library(rvest) is loaded (see the package list at the end)
PLM_IMDB <- read_html("http://www.imdb.com/title/tt2155025")
character <- html_nodes(PLM_IMDB, ".character")
html_text(character)
# [1] "\n \n Josh\n 32 episodes, 2013-2016\n \n\n \n "
# [2] "\n \n Tom\n 30 episodes, 2013-2016\n \n\n \n "
# [3] "\n \n John the Dog\n 30 episodes, 2013-2016\n \n\n \n "
# [4] "\n \n Mum\n 29 episodes, 2013-2016\n \n\n \n "
# [5] "\n \n Dad\n 27 episodes, 2013-2016\n \n\n \n "
# [6] "\n \n Mae\n 22 episodes, 2013-2016\n \n\n \n "
# [7] "\n \n Hannah\n 22 episodes, 2014-2016\n \n\n \n "
# [8] "\n \n Arnold\n 22 episodes, 2014-2016\n \n\n \n "
# [9] "\n \n Claire\n 21 episodes, 2013-2016\n \n\n \n "

As you can see, this is quite messy, and I only have 9 names even though I can see way more on the website. I tried a few different options, and Google suggested I should look for the word “table”. I was sick of IMDb, so I turned to Wikipedia. As you can see below, the data frame that I get is not very tidy.

website <- read_html("https://en.wikipedia.org/wiki/List_of_Please_Like_Me_episodes")
cast <- html_nodes(website, xpath = '//*[@id="mw-content-text"]/div/table[2]')

table1 <- html_table(cast) %>%
  as.data.frame()
table2 <- html_table(cast, fill = TRUE) %>%
  as.data.frame()
table3 <- html_table(cast, header = TRUE) %>%
  as.data.frame()

head(table1$Title, 3)
# [1] "\"Rhubarb and Custard\""

# [2] "Josh (Josh Thomas) is dumped by his girlfriend Claire (Caitlin Stasey) because she believes he’s gay, which he thought was just a phase. While visiting his friend Tom (Thomas Ward) at work, he meets Tom’s new attractive gay workmate Geoffrey (Wade Briggs), who asks to come to dinner. At dinner, Josh has a confrontation with Tom’s overbearing girlfriend Niamh (Nikita Leigh-Pritchard), who he wants to dump, and Geoffrey asks to stay the night. Later the two share a bed and an awkward kiss. The next morning Josh learns his mother, Rose (Debra Lawrance), has attempted suicide. Alan (David Roberts), Josh’s dad, fears it’s because of their divorce and his new girlfriend, Mae (Renee Lim), who Rose doesn’t know about. On the way to the hospital, Josh tells Tom about Geoffrey with both of them ignoring Josh’s coming out. Worried over her safety, Rose’s psychiatrist cannot release her unless she has someone at home with her. After pretending to hold it together, Josh sheds a private tear at home before resolving to move back in with his mother."

# [3] "\"French Toast\""

Again, I tried a few different ways, but I just couldn’t create a nice table. I was becoming more and more disillusioned, so I decided to try to extract one column at a time and then combine them.

website <- read_html("https://en.wikipedia.org/wiki/List_of_Please_Like_Me_episodes")

# Episode titles and episode descriptions sit under separate CSS classes
PLM_nodes <- website %>%
  html_nodes(".summary")
PLM_nodes1 <- website %>%
  html_nodes(".description")

PLM_text <- html_text(PLM_nodes)
PLM_text1 <- html_text(PLM_nodes1)

# tibble() replaces the deprecated data_frame()
PLM <- tibble(episode_title = PLM_text, description = PLM_text1)

It worked! Whenever I had thought about web scraping, I always had some kind of sentiment analysis in mind. By the time I actually had some data it was pretty late, so I have only scraped the surface of the fun things I can do with this data, but here is my first attempt.

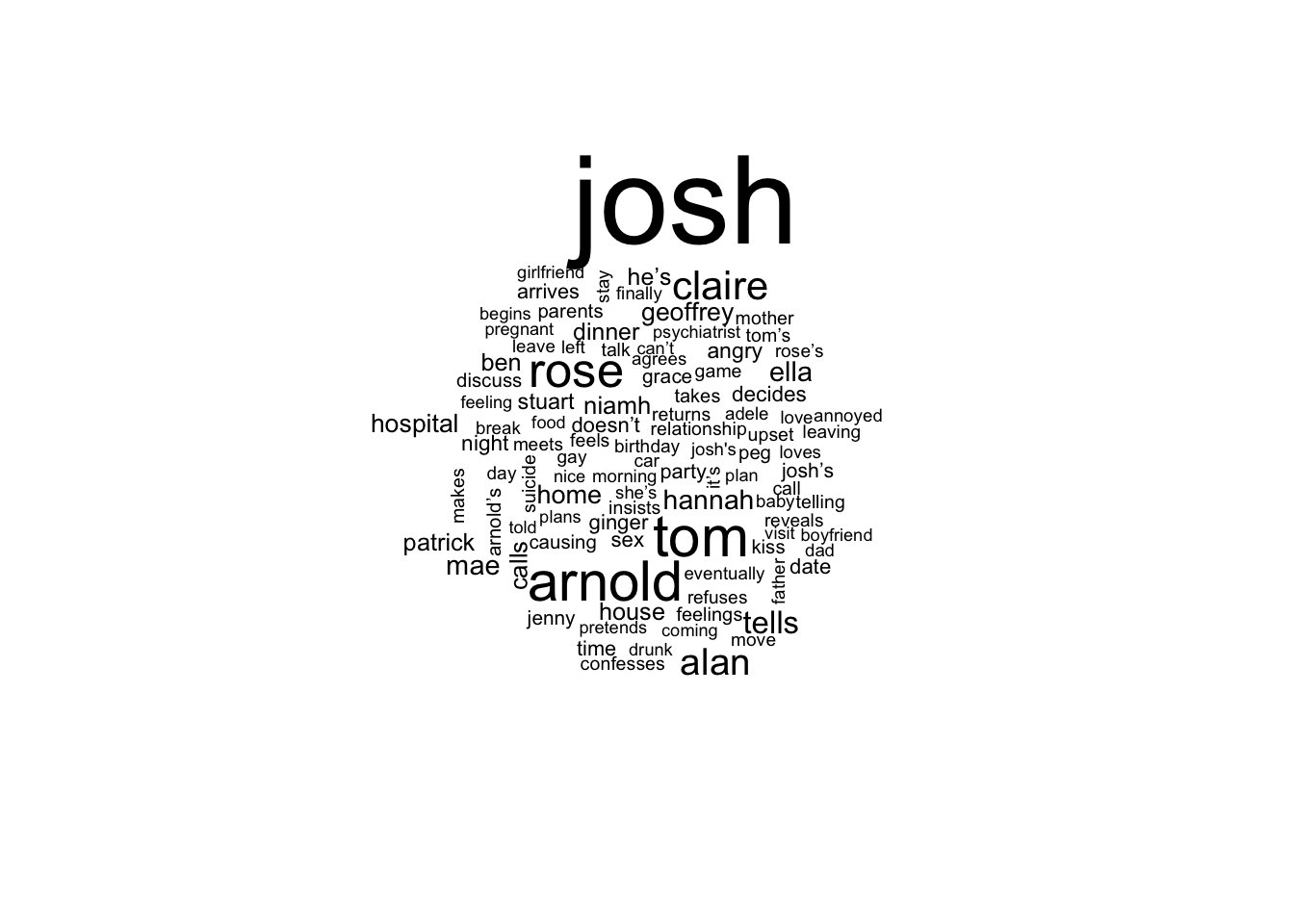

Getting that sweet word cloud

Let’s split the description variable so that each word is on a separate row and then we can look at the most common words.

tidy_PLM <- PLM %>%
  unnest_tokens(output = word, description, to_lower = TRUE)

cleaned_PLM <- tidy_PLM %>%
  anti_join(stop_words, by = "word") # remove stop words (words that are very common)

cleaned_PLM %>%
  count(word, sort = TRUE) %>%
  head(10)
# # A tibble: 10 x 2
#    word       n
#    <chr>  <int>
#  1 josh     252
#  2 tom       99
#  3 arnold    95
#  4 rose      78
#  5 claire    58
#  6 alan      51
#  7 tells     38
#  8 ella      29
#  9 mae       29
# 10 hannah    28

Almost all of the top 10 words are character names.

One of my favourite characters is John the dog and I wanted to see how often he was mentioned and in what context. I split the data frame into sentences instead of words.

tidy_PLM_sentence <- PLM %>%
  unnest_tokens(sentence, description, token = "sentences", to_lower = FALSE) %>%
  mutate(john = str_detect(sentence, regex("john", ignore_case = TRUE)))

tidy_PLM_sentence %>%
  filter(john) %>%
  select(sentence)
# # A tibble: 3 x 1
#   sentence
#   <chr>
# 1 In the end, Josh spends Christmas day alone in the park eating trifle w…
# 2 At the house, Alan wanders around sadly, telling John the dog he’s gett…
# 3 With Arnold and Claire’s bad moods, Tom decides the day has come to des…

I did, of course, create the obligatory word cloud. It probably would have been a good idea to remove the character names.

cleaned_PLM %>%
  count(word) %>%
  with(wordcloud(word, n, max.words = 100))
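Here is a rough sketch of how the character names could be dropped before drawing the cloud. It reuses the same anti_join trick that removed the stop words. The counts are copied from the top 10 table earlier in the post, and the `character_names` list is something I typed by hand for illustration (an assumption, not scraped data), so treat it as a starting point rather than a finished solution.

```r
library(dplyr)

# Word counts copied from the top 10 table in this post; with the real data
# this would be cleaned_PLM %>% count(word).
word_counts <- tibble(
  word = c("josh", "tom", "arnold", "rose", "claire",
           "alan", "tells", "ella", "mae", "hannah"),
  n = c(252L, 99L, 95L, 78L, 58L, 51L, 38L, 29L, 29L, 28L)
)

# Hypothetical hand-written list of character names to drop (an assumption).
character_names <- tibble(
  word = c("josh", "tom", "arnold", "rose", "claire", "alan",
           "ella", "mae", "hannah", "john", "geoffrey", "niamh")
)

# Keep only the words that are not in the character name list.
topic_words <- word_counts %>%
  anti_join(character_names, by = "word")

print(topic_words)
```

With the real data, the same anti_join step would sit between cleaned_PLM and count(word), just before the call to wordcloud().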

Next steps

The code I ended up with is very different from what I set out to write. In hindsight I should have read a few rvest and tidytext tutorials, as this would have saved me time. There are so many things I want to do with this data. I want to add the season and episode number to the data set to see if the language changed over time. I should probably remove the character names from the data I use for the word cloud so that it shows the topics of the show rather than just the names of the characters. Mostly I want to include a picture of John the dog. Since this blog is as much about the learning process as the actual results of any code, I decided to publish the few successful lines of code that I wrote today.

Packages I used

library(wordcloud)
library(tidyr)
library(reshape2)
library(rvest)
library(tidytext)
library(tidyverse)